Low-level programming languages are integral to computer science as they operate close to the machine level, allowing programmers to write code that runs directly on the hardware with minimal abstraction. They stand in contrast to high-level languages, which are more abstracted from the computer’s hardware and architecture. A prominent example of a low-level language is assembly language, which uses mnemonics to represent machine-level operations, translating directly into machine code – the binary instructions that the processor understands.

Understanding the fundamentals of computer operations requires insight into the machine cycle. The machine cycle consists of four steps: fetching the instruction, decoding the instruction, executing the instruction, and storing the result. This cycle is how the computer carries out every program instruction. On the hardware side, the smallest unit of data is the bit, the basic addressable unit of storage is the byte (eight bits), and the physical housing that contains most of the computer’s core components is commonly referred to as the system unit or chassis. To maintain optimal performance and longevity, computer equipment should be kept in an environment with a relative humidity between 45% and 60%.

At the core of a computer’s start-up procedure is the boot process, in which the BIOS or another firmware interface initializes and tests the system hardware and then loads the operating system. The firmware is the first software to start when a computer is turned on. When a computer is connected to a network, the network interface card (NIC) provides the physical connection for sending and receiving data packets, while a router determines the path those packets take between networks. The central processing unit (CPU) is responsible for executing program instructions. Input devices like the keyboard, mouse, and scanner are fundamental components that send data to a computer.

Key Takeaways

- Low-level programming languages are essential for direct communication with computer hardware.

- The machine cycle is crucial for the execution of instructions in a computer system.

- Keeping computer equipment within the right humidity range ensures its reliability and longevity.

Understanding Low Level Programming Languages

Low-level programming languages serve as a critical interface between human-readable code and the machine instructions that a computer’s CPU executes. They are foundational for understanding how software controls hardware.

Defining Low Level Programming Language

Low-level languages, such as assembly language and machine code, are closer to the hardware than their high-level counterparts. They fall into two categories. The first is machine code, which consists entirely of binary data understood directly by a computer’s CPU. The second is assembly language, which uses abbreviations (mnemonics) to represent machine code instructions. Assembly offers slightly more abstraction and is translated into machine code by an assembler.

The Role of Assemblers and Compilers

Assemblers are translators that convert assembly language into machine code, usually with a close one-to-one mapping between mnemonics and machine instructions. Compilers, more broadly, transform code from one programming language (e.g., a high-level language) into another (e.g., a low-level language like assembly or machine code). Both tools perform a crucial step in preparing a program to run on hardware.
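As a rough illustration of what an assembler does, the sketch below translates a few mnemonics into bytes. The opcode values and the two-byte instruction format (one opcode byte followed by one operand byte) are invented for this example and do not correspond to any real CPU.

```python
# Toy assembler: the opcode table and the two-byte instruction format
# are hypothetical, chosen only to illustrate mnemonic-to-binary translation.
OPCODES = {"LOAD": 0x01, "ADD": 0x02, "SUB": 0x03, "STORE": 0x04, "HALT": 0xFF}


def assemble(source: str) -> bytes:
    """Translate lines like 'ADD 7' into opcode/operand byte pairs."""
    machine_code = bytearray()
    for line in source.splitlines():
        line = line.split(";")[0].strip()  # drop comments and whitespace
        if not line:
            continue
        parts = line.split()
        mnemonic = parts[0].upper()
        operand = int(parts[1], 0) if len(parts) > 1 else 0
        if mnemonic not in OPCODES:
            raise ValueError(f"unknown mnemonic: {mnemonic}")
        if not 0 <= operand <= 0xFF:
            raise ValueError(f"operand out of range: {operand}")
        machine_code += bytes([OPCODES[mnemonic], operand])
    return bytes(machine_code)


program = """
LOAD 5      ; put 5 in the accumulator
ADD 7       ; add 7 to it
STORE 0x10  ; write the result to address 0x10
HALT
"""
code = assemble(program)
print(code.hex(" "))
```

A real assembler also handles labels, addressing modes, and variable-length instructions, but the core idea is the same table lookup from mnemonic to numeric opcode.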

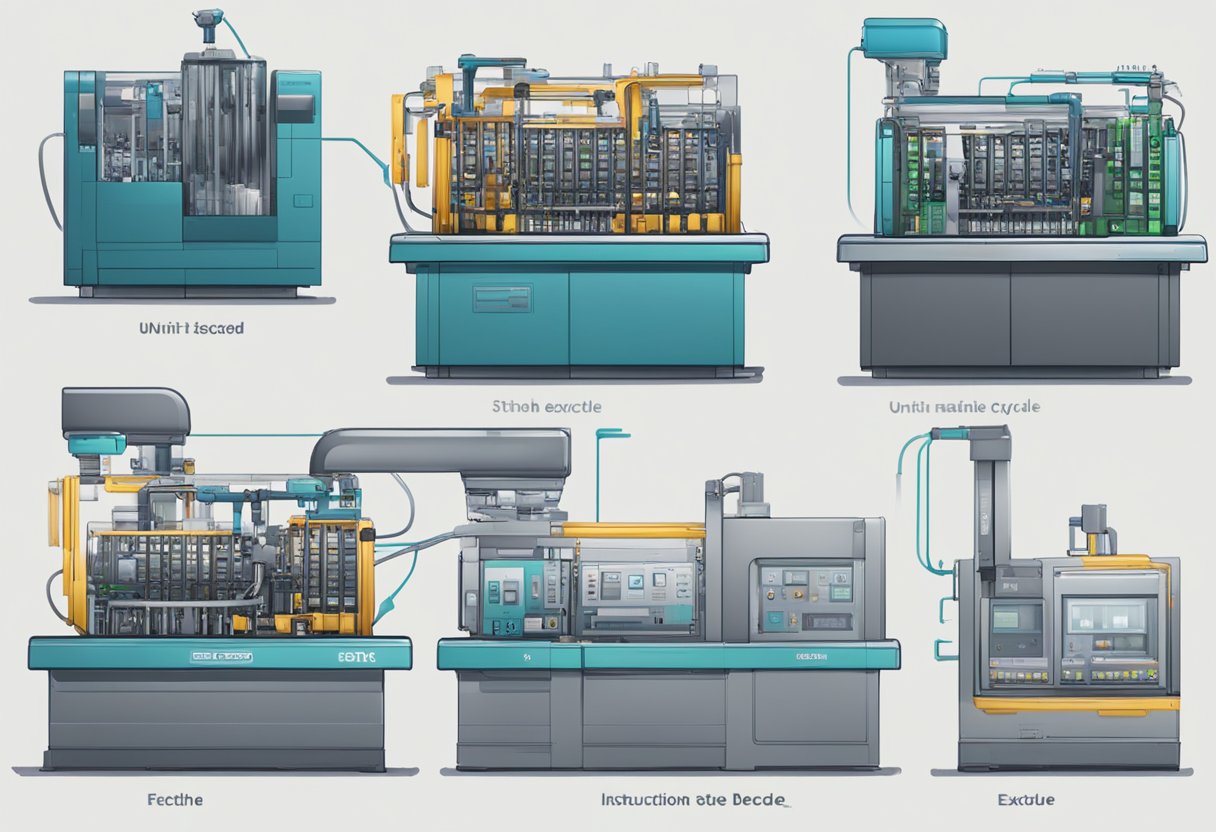

Execution Semantics and the Machine Cycle

The execution semantics of low-level languages relate directly to the machine cycle, a four-step process essential to computer operations. The cycle begins with the fetch step, where an instruction is retrieved from memory. Next is decode, where the instruction is converted into control signals. The execute step follows, where the decoded instruction is carried out. Lastly, the store step completes the cycle by writing the results back to a register or to memory. The CPU repeats this cycle for each instruction in sequence. Instructions are held in memory in binary form; hexadecimal, decimal, and octal are notations that people use to read and write those same binary values.

The Machine Cycle

The machine cycle is integral to the functioning of the Central Processing Unit (CPU), as it describes the process by which a computer executes instructions.

Role of the Processor

The processor, or CPU, is the brain of the computer responsible for carrying out all instructions through a series of steps known as the machine cycle. It operates using an instruction set, which is a group of commands understood by the CPU.

Steps in the Machine Cycle

- Fetch: The CPU retrieves an instruction from the main memory using the program counter, which keeps track of the next instruction to process.

- Decode: The instruction that has been fetched is then decoded and interpreted by the CPU. The decoding process figures out what action is required.

- Execute: The CPU’s Arithmetic Logic Unit (ALU) performs the necessary calculations or processes the data as per the instruction.

- Store: Finally, the result of the execution is stored back in the main memory or a register.

This cycle of fetch, decode, execute, and store repeats for every instruction until the program is completed. Each step is crucial for the efficient operation of the CPU, enabling it to process complex tasks in a systematic way.
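The four steps can be sketched as a small loop. The machine below is a made-up accumulator design with four invented instructions, so it shows the shape of the cycle and not how any particular processor is built.

```python
# Minimal fetch-decode-execute-store loop for a made-up accumulator machine.
# The instruction names and the (opcode, operand) layout are hypothetical.
def run(program, memory):
    pc = 0            # program counter: index of the next instruction
    acc = 0           # accumulator register
    while True:
        instruction = program[pc]            # fetch
        pc += 1
        opcode, operand = instruction        # decode
        if opcode == "LOAD":                 # execute
            acc = memory[operand]
        elif opcode == "ADD":
            acc = acc + memory[operand]
        elif opcode == "STORE":
            memory[operand] = acc            # store the result back to memory
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode: {opcode}")


memory = [2, 3, 0]                      # two inputs and one result cell
program = [("LOAD", 0), ("ADD", 1), ("STORE", 2), ("HALT", 0)]
result = run(program, memory)
print(result)
```

Here the program adds the values in cells 0 and 1 and stores the sum in cell 2. Note that the program counter advances during the fetch step, which is why it always points at the next instruction.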

Computer Hardware Essentials

The core components of computer systems are designed to efficiently store, process, and transfer data. Understanding these components and their functions is fundamental to grasping how computers operate.

Primary Storage and Memory Units

The basic unit of storage in a computer is the byte. Bytes store individual pieces of data in binary form, using ones and zeros to represent information. RAM, or random-access memory, is a crucial component, as it serves as the primary workspace for the processor, holding the instructions and data that are actively being used. The amount and speed of RAM influence how quickly a system can access and process instructions.

The Central Processing Unit and Its Functions

Referred to as the “brain” of the computer, the Central Processing Unit (CPU) is responsible for executing program instructions. The efficiency of a CPU is determined by its hardware architecture and its clock speed, measured in gigahertz (GHz). The CPU performs four basic steps in the machine cycle: fetch, decode, execute, and store, thus processing instructions to carry out computer programs.
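To make the clock-speed figure concrete, the short sketch below converts a clock rate in gigahertz into the duration of one clock cycle. The 3.0 GHz value is only an example, and clock speed alone does not determine performance, since one instruction may take several cycles.

```python
# Convert a clock speed in GHz to the duration of one clock cycle.
def cycle_time_ns(clock_ghz: float) -> float:
    """Return the length of one clock cycle in nanoseconds."""
    return 1.0 / clock_ghz   # 1 GHz = one cycle per nanosecond


clock_ghz = 3.0   # hypothetical CPU clock speed
print(f"{clock_ghz} GHz -> {cycle_time_ns(clock_ghz):.3f} ns per cycle")
```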

Computer Ports and Connectors

A variety of ports and connectors are used to extend the functionality of computer hardware. The HDMI port, for instance, transmits audio and video signals without the need for compression. For peripheral devices like a mouse, modern PCs typically use a USB connector. Additionally, many desktop computers have a front panel, which usually includes ports like USB, audio, and sometimes video connections for quick access.

The Language of Computers

Low-level programming languages are the foundational languages that bridge the gap between a computer’s hardware and higher-level languages. They offer minimal abstraction and allow for direct control over hardware.

Assembly Language and Mnemonics

Assembly language relies on mnemonics, which are short codes or words representing machine instructions. Mnemonics make it easier for programmers to remember and write the instructions needed for hardware control. For example, MOV, ADD, and SUB are common mnemonics for moving data, adding, and subtracting values, respectively. Assembly is tightly tied to a system’s architecture: each assembly language is specific to a processor’s instruction set, and each mnemonic corresponds to an opcode, the part of a machine instruction that identifies the operation to perform.

Assembly code commonly uses hexadecimal (base-16) notation. Because each hexadecimal digit stands for exactly four bits, it gives a compact and more readable view of binary values. Hexadecimal numbers are often employed when dealing with memory addresses, which are crucial for pinpointing data within the computer’s memory.
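The relationship between hexadecimal and binary can be checked with a few lines of code. The address below is an arbitrary example value, not one taken from a real program.

```python
# Convert between binary, hexadecimal, and decimal views of the same value.
address = 0x1F4A                      # hypothetical memory address
as_binary = format(address, "016b")   # 16-bit binary string
as_hex = format(address, "04X")       # 4-digit hexadecimal string

# Each hex digit corresponds to one group of four bits (a nibble).
nibbles = [as_binary[i:i + 4] for i in range(0, 16, 4)]
print(address, as_hex, nibbles)
```

Reading the nibbles left to right gives the hex digits 1, F, 4, and A, which is why a 16-bit address fits in four hexadecimal characters.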

Machine Language and Binary

At the core of low-level programming is machine language, the set of binary instructions that a computer’s CPU executes directly. Machine instructions are pure binary data, and they form the most fundamental level of programming possible. In these terms, the binary code could be seen as a sequence of 0s and 1s, such as 11010011, which the processor interprets and acts upon without any need for translation.

Developers occasionally debug low-level code by examining binary and hexadecimal representations to isolate errors within the machine instructions. Given its complexity and its direct tie to hardware architecture, machine language is rarely written by hand today, having been largely replaced by assembly language for such low-level tasks.

Programming Abstractions and Efficiency

Understanding the trade-offs between programming abstractions and efficiency underpins the performance of computer systems. Choices in software design and language selection greatly impact how effectively a computer executes tasks.

High-Level vs Low-Level Paradigms

High-level programming languages such as Java and Python provide strong abstraction layers between the code developers write and the machine’s hardware. These abstractions allow programmers to focus on solving complex problems without worrying about the underlying machine-specific details. However, these conveniences can come with a performance cost due to less direct control over hardware.

On the other hand, low-level programming languages like Assembly and C sit closer to the hardware. They give developers more control to optimize for efficiency, at the cost of greater complexity and longer development times. C is particularly notable for striking a balance, offering closer hardware interaction while maintaining some elements of high-level programming.
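One way to see how much a high-level language hides is to look at the lower-level steps behind a single expression. The sketch below uses Python’s standard `dis` module to list the bytecode instructions for a one-line function. This bytecode targets the CPython virtual machine, not the CPU, so it is an analogy for machine code and not the real thing, and the exact instruction names vary between Python versions.

```python
import dis


def add(a, b):
    return a + b


# CPython compiles the function to bytecode for its virtual machine.
# This is not CPU machine code, but it shows the lower-level steps
# that a single high-level expression expands into.
instructions = [ins.opname for ins in dis.get_instructions(add)]
print(instructions)
```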

Optimization and System Efficiency

Efficiency in computer programs is closely linked to the level of optimization achievable in the language in use. Low-level programming languages can often produce faster and more memory-efficient programs because they allow fine-tuned performance optimizations. For instance, assembly language is used when the utmost execution speed and memory control are required, since it maps directly to machine code that the processor can execute with minimal overhead.

High-level languages, with their more readable and maintainable code, often sacrifice a degree of speed and memory efficiency. However, modern high-level languages have sophisticated compilers and environments that perform various optimizations to close the efficiency gap. For example, the Just-In-Time (JIT) compilers in Java can dynamically translate high-level code to optimized machine code at runtime.

The balance between abstraction and efficiency is a central consideration in software development. Unnecessarily high abstraction can degrade performance, while too low-level an approach can lead to complex and error-prone code. The choice of programming language and paradigm must be informed by the specific requirements and constraints of the project, balancing the need for abstraction against the demands for efficiency.

Storage and Data Representation

Understanding how a computer stores and represents data is fundamental to grasping how computers operate. The intricacies of data management are foundational to computing, involving units of storage and methods for extending memory capacity.

Basic Units of Storage

The byte is the basic addressable unit of storage in a computer system, traditionally representing a single character of data. It is composed of 8 bits, where each bit is a binary digit that can be either 0 or 1. This binary numbering system is the basis for all computer logic. The same sequence of bits can be written in binary, octal, decimal, or hexadecimal notation, but the computer always stores and manipulates it as bits.

Coding systems, like ASCII and Unicode, use these bytes to represent characters, allowing computers to store text. The hexadecimal system is often used in programming as it provides a more human-readable form of binary-coded data. For instance, a single hexadecimal digit can represent four binary digits, thus making it simpler to understand and communicate the values stored in memory.
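A short example shows how characters map to bytes. It uses ASCII for a plain letter and UTF-8, one common encoding of Unicode, for an accented letter; the choice of characters is arbitrary.

```python
# How characters map to bytes under ASCII and UTF-8 (a Unicode encoding).
letter = "A"
ascii_bytes = letter.encode("ascii")        # one byte: 0x41
bits = format(ascii_bytes[0], "08b")        # the same byte written as 8 bits

accented = "\u00e9"                         # the character e with an acute accent
utf8_bytes = accented.encode("utf-8")       # two bytes in UTF-8

print(ascii_bytes.hex(), bits, utf8_bytes.hex(), len(utf8_bytes))
```

The letter A fits in a single byte, while the accented character needs two bytes in UTF-8, which is why the byte only "traditionally" corresponds to one character.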

Auxiliary Storage Devices

Auxiliary storage devices serve the crucial role of maintaining data persistency, storing data even when the computer is powered off. These devices, such as hard drives, solid-state drives, and USB flash drives, are essential for the long-term retention of large volumes of data. They extend the limited capacity of a computer’s main memory and can be used to back up important information or transfer data between different systems.

Data on these storage devices is organized in a hierarchical file system, often depicted in a directory tree structure. The efficiency and speed of data retrieval can depend on the device’s technology—for instance, solid-state drives are faster than conventional hard disk drives because they have no moving parts and access data electronically.

Auxiliary storage is available in various forms, each suitable for different needs and uses. It allows users to keep extensive archives and libraries that far exceed what main memory can hold, critically supporting the vast data requirements of modern applications.

Computer Start-Up Processes

When a computer is powered on, the start-up process that begins is known as bootstrapping, or simply booting. This critical start-up sequence loads the operating system (OS) into the computer’s memory. The first software to start is the firmware, traditionally the Basic Input/Output System (BIOS) and on newer systems UEFI, which checks and initializes connected hardware such as the hard drive, keyboard, and display.

Power-On Self-Test (POST)

- Upon start-up, the BIOS conducts the POST to verify the system’s hardware integrity.

Detection of Boot Device

- BIOS identifies the bootable device from which the OS will be loaded.

OS Loading

- After a successful POST and boot device detection, BIOS hands over control to the boot loader on the boot device, which loads the OS.

During this process, system drivers are loaded, enabling the OS to communicate with the hardware components. Drivers are specific pieces of software that act as translators between the operating system and the hardware. Without the proper driver, the component might not function correctly or at all.

Interpreter

- Some boot processes may involve an interpreter for an intermediate stage in the OS loading phase.

The OS is loaded into the computer’s main memory or Random Access Memory (RAM), after which the computer is ready for user interactions. This sequence ensures that the system’s hardware is ready and capable of executing software instructions, allowing for the interaction between user and machine.

Throughout this start-up process, proper interpretation of instructions is crucial for hardware and software synchronization, leading to a responsive and fully functional computer system.
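The order of the start-up stages described above can be modeled in a few functions. This is a deliberately simplified sketch: the component names, device names, and status messages are hypothetical, and real firmware does far more work at each stage.

```python
# Simplified model of the start-up sequence: POST, boot device
# detection, then loading the OS. All device names are hypothetical.
def power_on_self_test(hardware):
    """Return the names of components that failed their check."""
    return [name for name, ok in hardware.items() if not ok]


def find_boot_device(devices):
    """Return the first device marked bootable, in boot-order sequence."""
    for device in devices:
        if device["bootable"]:
            return device["name"]
    return None


def boot(hardware, devices):
    failures = power_on_self_test(hardware)
    if failures:
        return f"POST failed: {', '.join(failures)}"
    boot_device = find_boot_device(devices)
    if boot_device is None:
        return "No bootable device found"
    return f"Loading OS from {boot_device}"


hardware = {"memory": True, "keyboard": True, "display": True}
devices = [
    {"name": "usb0", "bootable": False},
    {"name": "ssd0", "bootable": True},
]
status = boot(hardware, devices)
print(status)
```

Each stage can stop the sequence: a failed POST or a missing boot device prevents the OS from loading at all.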

Networking and Data Transmission

Networking and data transmission are essential for the flow of information between computers. Understanding network connections and how multimedia data is transferred is crucial for efficient and effective communication.

Identifying Network Connections

To facilitate communication between computers, it is imperative to understand network connections. The default gateway is a crucial component, typically a router on a network that serves as an access point to other networks. It is integral in ensuring data is sent correctly through networks. Moreover, network connections involve various protocols and standards, which are necessary for seamless interaction between devices.
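The role of the default gateway can be illustrated with Python’s standard `ipaddress` module. The network range and addresses below are example values chosen for this sketch, and the function name `next_hop` is likewise an illustration, not part of any library.

```python
import ipaddress

# Hypothetical home network: addresses are examples, not from the article.
local_network = ipaddress.ip_network("192.168.1.0/24")
default_gateway = ipaddress.ip_address("192.168.1.1")


def next_hop(destination: str):
    """Return where a packet is sent first: the host itself or the gateway."""
    dest = ipaddress.ip_address(destination)
    if dest in local_network:
        return dest              # same network: deliver directly
    return default_gateway       # other network: hand off to the gateway


print(next_hop("192.168.1.42"), next_hop("203.0.113.10"))
```

A destination inside the local range is reached directly, while anything outside it is handed to the gateway, which forwards it toward other networks.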

Transmission of Multimedia via Computer Ports

The HDMI port is adept at transmitting audio and video signals without the need for compression, ensuring the integrity of the multimedia data. Additionally, the role of device drivers is to act as translators between the operating system and hardware devices, crucial for the recognition and proper use of computer ports and peripherals.

Input Devices and Components

Input devices are integral to the operation of a computer, allowing users to interact with and provide data to their devices. The keyboard is often considered the primary input device, used for typing text and commands. Its design and layout, like the QWERTY configuration, are standardized for ease of use.

The mouse is another essential input device, facilitating point-and-click interactions with a graphical user interface. It may include buttons and a scroll wheel for extended functionalities. In contrast, touchscreens offer a more direct method of interaction by recognizing touch gestures directly on the display.

Scanners convert physical documents and images into digital format, enabling digital archiving and processing. They come in various forms, including flatbed and sheet-fed varieties, and use optical technology to capture the information.

List of Common Input Devices:

- Keyboard

- Mouse

- Touchscreen

- Scanner

These devices connect to the system through various interfaces, such as USB ports or wireless connections, providing a means for information to enter the computer’s processing unit.

It is through these interfaces and devices that a computer receives most of its directive input, allowing it to carry out tasks and process information as required by the user. The effective use of these devices is critical to the functionality and efficiency of computer systems.

Advancements in Computer Architecture

The field of computer architecture has seen significant transformations that have fueled innovation across various technological domains. These advancements have led to improvements in efficiency, speed, and the broadening of computing applications.

Evolution of Processor Architectures

Processor architectures have undergone major changes, with early models like the PDP-8/E minicomputer laying the groundwork for more complex systems. Today’s modern CPUs, including the x86-64 processor, exhibit remarkable capabilities compared to their predecessors. For instance, the shift from 32-bit to 64-bit computing in processors has allowed for a massive increase in the memory that systems can address, enhancing the performance of both software and hardware.
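The jump in addressable memory follows directly from the address width. The calculation below assumes byte-addressable memory and gives the theoretical limits; actual processors and operating systems typically support less than the full 64-bit range.

```python
# Bytes addressable with n-bit addresses, assuming byte-addressable memory.
def addressable_bytes(address_bits: int) -> int:
    return 2 ** address_bits


GIB = 2 ** 30   # gibibyte
EIB = 2 ** 60   # exbibyte

limit_32 = addressable_bytes(32) // GIB   # 4 GiB
limit_64 = addressable_bytes(64) // EIB   # 16 EiB (theoretical)
print(limit_32, "GiB;", limit_64, "EiB")
```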

As CPU technology advanced, multi-core processors became standard. These CPUs can execute multiple tasks simultaneously, significantly improving multitasking and computational power. Additionally, specialized architectures like those found in graphics processing units (GPUs) have advanced to perform highly parallel calculations faster than traditional CPUs.

Computer architecture isn’t static; it continuously evolves to meet the demands of new software and technologies. Innovations in architecture, like simultaneous multithreading (marketed by Intel as Hyper-Threading), have been instrumental in optimizing computational tasks and resource utilization.

Embedded Systems and Modern Computer Types

The rise of embedded systems exemplifies the tailor-made approach that modern computer architectures have adopted. These systems are integral to a broad range of devices, from simple household appliances to sophisticated industrial machinery. They are designed to perform specific tasks and are built into larger systems, often with real-time computing constraints.

IoT, or the Internet of Things, has been one of the beneficiaries of advancements in embedded systems, where a plethora of devices and objects are interconnected and exchange data autonomously. The miniaturization and integration of components have enabled the creation of smart devices that are efficient, connected, and increasingly intelligent.

This customized approach enables systems to be optimized for the specific needs of an application, improving not just performance but also energy efficiency, a crucial aspect in today’s environmentally conscious world.

In conclusion, advancements in computer architecture are not only characterized by the progression of processing power and miniaturization but also by the ability to fit computing technology into a growing variety of applications. As we move forward, we can expect these trends to continue, fueled by ongoing innovation and the ever-increasing demands of the technology landscape.

Memory Hierarchy and Performance

In computing, performance is heavily influenced by memory hierarchy, a structured layering of various storage types based on speed and cost.

Cache Memory and Its Types

Cache memory serves as a high-speed buffer between the CPU and RAM. It stores frequently accessed data to quickly fulfill future requests. There are several types of cache memory characterized by their proximity to the CPU:

- L1 Cache: Embedded directly in the CPU chip, L1 cache has the fastest access times but limited capacity.

- L2 Cache: Usually located on the CPU chip or in close proximity, L2 cache is larger than L1 but slower.

- L3 Cache: Typically located on the CPU chip in modern processors (and on the motherboard in some older designs), L3 cache is slower and larger than both L1 and L2 and is usually shared among the processor cores.

Comparing Memory Speeds and Costs

When comparing the memory hierarchy, there is a trade-off between speed and cost:

- Primary Memory (RAM): Faster than secondary storage, but slower and less expensive per byte than cache memory.

- Secondary Memory: Includes devices like HDDs and SSDs which provide larger storage at lower cost but with slower access speeds than RAM or cache.

The hierarchy ensures that the most frequently used data is quickly accessible, improving overall system performance while balancing costs.
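A small simulation shows why keeping frequently used data in a fast cache pays off. The latencies are illustrative placeholders and not measurements, and the least-recently-used (LRU) eviction policy is an assumption made for this sketch; real caches use a variety of policies.

```python
from collections import OrderedDict

# Hypothetical latencies in nanoseconds, chosen only to show the trade-off.
CACHE_NS = 1
RAM_NS = 100


def simulate(accesses, cache_size):
    """Replay memory accesses through a small LRU cache; return (hits, total_ns)."""
    cache = OrderedDict()
    hits = 0
    total_ns = 0
    for address in accesses:
        if address in cache:
            hits += 1
            total_ns += CACHE_NS
            cache.move_to_end(address)          # mark as most recently used
        else:
            total_ns += CACHE_NS + RAM_NS       # miss: check cache, then go to RAM
            cache[address] = True
            if len(cache) > cache_size:
                cache.popitem(last=False)       # evict least recently used
    return hits, total_ns


accesses = [1, 2, 3, 1, 2, 3, 1, 2, 3, 4]
hits, total_ns = simulate(accesses, cache_size=3)
print(hits, total_ns)
```

With these numbers, six of the ten accesses are served from the cache, so the total time is well under the 1000 ns that ten direct RAM accesses would cost.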

Computer Maintenance and Environment

Proper maintenance and environmental controls are crucial in ensuring the longevity and reliability of computer systems.

Humidity Control and Equipment Protection

Computers and other electronic equipment require a controlled environment to function optimally. The recommended range for computer equipment is generally between 45% and 60% relative humidity. Staying above the lower bound limits static electricity build-up, which can damage components, while staying below the upper bound wards off condensation that can cause corrosion or electrical shorts. It is necessary to regularly monitor the humidity levels in environments where computers are operating to safeguard the equipment and ensure consistent performance.
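Monitoring can be as simple as comparing each reading against the recommended range. The sketch below uses the 45% to 60% range from the text; the sensor readings and status messages are made up for illustration.

```python
# Range taken from the text: 45% to 60% relative humidity.
HUMIDITY_MIN = 45.0
HUMIDITY_MAX = 60.0


def humidity_status(relative_humidity: float) -> str:
    """Classify a relative-humidity reading (in percent)."""
    if relative_humidity < HUMIDITY_MIN:
        return "too dry: static electricity risk"
    if relative_humidity > HUMIDITY_MAX:
        return "too humid: condensation risk"
    return "ok"


readings = [38.0, 52.5, 67.0]   # hypothetical sensor readings
statuses = [humidity_status(r) for r in readings]
print(statuses)
```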

The Importance of Technical Standards

Technical standards ensure interoperability and compatibility in technology ecosystems, fostering efficient communication, safety, and reliability across devices and networks.

Protocol Standards and Their Significance

Protocol standards are the backbone of network communication, ensuring that data packets are transmitted across networks efficiently, securely, and reliably. These standards, such as TCP/IP for internet communication or HTTP for web traffic, are maintained by organizations like the Internet Engineering Task Force (IETF) so that different devices can understand and exchange information regardless of their underlying hardware or software. The significance of protocol standards lies in their ability to unify communication, giving network services a seamless, global reach. Without these agreed-upon sets of rules, the connectivity and functionality of the internet and other networks as we know them would be compromised.
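Because a protocol such as HTTP fixes the exact format of each message, any two programs that follow the standard can understand each other. The sketch below builds a minimal HTTP/1.1 request as plain text and parses its first line. The host name and path are placeholders, the helper function names are invented for this example, and no network connection is made.

```python
# Build and parse a minimal HTTP/1.1 request as plain text.
# The host name is a placeholder; no network connection is made.
def build_request(host: str, path: str = "/") -> bytes:
    lines = [f"GET {path} HTTP/1.1", f"Host: {host}", "Connection: close", "", ""]
    return "\r\n".join(lines).encode("ascii")


def parse_request_line(raw: bytes):
    """Split the first line into method, target, and protocol version."""
    first_line = raw.split(b"\r\n", 1)[0].decode("ascii")
    method, target, version = first_line.split(" ")
    return method, target, version


request = build_request("example.com", "/index.html")
parsed = parse_request_line(request)
print(parsed)
```

The receiver can recover the method, target, and version only because both sides agree on the separators and line endings defined by the standard.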

Frequently Asked Questions

In exploring the intricacies of low-level programming languages, one can find detailed answers to common queries regarding the functionality and components of computers.

What are the four stages of the instruction cycle in low level programming?

The instruction cycle, also known as the machine cycle, consists of four fundamental stages: fetching the instruction from memory, decoding the instruction, executing the instruction, and then storing the resulting data. This cycle is crucial for the processor to carry out program instructions.

What is the fundamental unit of data storage in computing?

The smallest unit of data in computing is the bit, which can have a value of either 0 or 1. The basic addressable unit of storage is the byte, which is composed of eight bits and serves as the building block for more complex data storage structures.

What is the common name for the chassis that houses computer components?

The common name for the chassis that contains the core components of a computer is the computer case or tower. It provides a protected enclosure for the motherboard, processor, memory, and other hardware.

What is the recommended humidity range for maintaining computer equipment?

The recommended humidity range for computer equipment to ensure safe and reliable operation is typically between 45% and 60%.

What type of memory is the fastest and most costly in a computer system?

Aside from the CPU’s internal registers, the fastest and most expensive memory per byte in a computer system is the CPU’s cache memory. It is designed to speed up the transfer of data and instructions between the CPU and the main memory.

What role do auxiliary storage devices serve in a computer system?

Auxiliary storage devices are used for permanent data storage and backups, expanding the limited capacity of a computer’s primary memory. They enable users to store large amounts of data long-term, such as on hard drives, solid-state drives, or optical media.